Handling Large Datasets with Pagination and Cursors in Laravel MongoDB

Last updated on by Farhan Hasin Chowdhury

Modern applications routinely deal with datasets containing millions of records. Whether you're building an e-commerce platform with extensive product catalogs, a social media feed, or an analytics dashboard, you'll eventually face the question of how to display large amounts of data without overwhelming your server or your users. Pagination is the standard solution, but not all pagination methods perform equally as your data grows.

This article explores two approaches to pagination when working with Laravel and MongoDB: offset-based pagination using skip() and limit(), and cursor-based pagination that uses document pointers. You'll learn how each method works internally, why offset pagination degrades at scale, and when cursor-based pagination offers a better alternative. By the end, you'll have practical implementation examples and clear guidance on choosing the right approach for your application.

Offset Pagination: Mechanics and Performance Problems

Offset pagination is the traditional approach most developers learn first. The concept is straightforward: you tell the database to skip a certain number of records and return the next batch. If you want page 5 with 20 items per page, you skip the first 80 records and fetch the next 20.

How Offset Pagination Works

In MongoDB, offset pagination uses skip() to set the starting point and limit() to control how many documents to return. Laravel's Eloquent provides the paginate() method that handles this automatically, or you can use skip() and take() manually for more control.

Here's a basic implementation:

use App\Models\Product; class ProductController extends Controller{ public function index(Request $request) { $page = max((int) $request->input('page', 1), 1); $perPage = 20; $skip = ($page - 1) * $perPage; $products = Product::orderBy('created_at', 'desc') ->skip($skip) ->take($perPage) ->get(); return response()->json($products); }}The skip value calculation is simple: ($page - 1) * $perPage. For page 1, you skip 0 records. For page 2, you skip 20. For page 100, you skip 1,980.

Laravel's built-in paginate() method wraps this logic and adds metadata like total pages and navigation links:

$products = Product::orderBy('created_at', 'desc')->paginate(20);This returns a paginator object with the results plus information about total records, current page, and URLs for previous and next pages.

Why Offset Pagination Fails at Scale

The problem with skip() becomes apparent when you examine what MongoDB does to execute the query. When you call skip(1000000), MongoDB doesn't jump directly to record 1,000,001. It must scan through all one million documents before returning your results. The database reads and discards every skipped document, which means page 10,000 takes dramatically longer than page 1.

Query time grows linearly with the offset value. If page 1 takes 5 milliseconds, page 1,000 might take 500 milliseconds, and page 10,000 could take several seconds. This degradation happens regardless of how well you've indexed your collection, because the skip operation itself requires traversing documents.

You can observe this behavior using MongoDB's explain feature:

db.products.find().sort({ created_at: -1 }).skip(1000000).limit(20).explain("executionStats")The docsExamined field in the output will show over one million documents examined, even though you're only returning 20.

The Count Problem

Offset pagination usually displays "Page X of Y" in the interface, which requires knowing the total number of documents in the collection. Getting this count on large collections is expensive. MongoDB must scan the entire collection to return an accurate count, and this operation doesn't benefit from indexes the way filtered queries do.

Several strategies can help mitigate the cost:

- Cache the count: store the total count in a separate location and refresh it periodically rather than calculating it on every request

- Use estimated counts: MongoDB's

estimatedDocumentCount()returns an approximate count much faster than an exact count - Avoid displaying totals: show "Next" and "Previous" buttons without revealing the total number of pages

// Fast estimated count$estimatedCount = DB::connection('mongodb') ->collection('products') ->raw(function ($collection) { return $collection->estimatedDocumentCount(); });When Offset Pagination Still Makes Sense

Despite its limitations, offset pagination works well in certain situations:

- Small to medium datasets: collections under 100,000 records rarely show noticeable performance issues

- Admin panels: internal tools where convenience outweighs performance concerns, and datasets are often filtered to manageable sizes

- Arbitrary page access: when users need to jump directly to page 50 or page 200, offset pagination is the only practical option

If your application fits these criteria, offset pagination's simplicity makes it the right choice. The performance problems only arise when users navigate to deep pages in large collections.

Cursor Pagination: The Scalable Alternative

Cursor-based pagination takes a different approach. Instead of counting how many records to skip, you use a pointer to the last record you saw and ask for everything that comes after it. This technique is also known as keyset pagination or seek pagination.

What is Cursor-Based Pagination

The cursor is a value that uniquely identifies a position in your sorted result set. When you request the next page, you pass this cursor, and the database queries for documents where the sort field is greater than (or less than, depending on direction) the cursor value.

Consider a timeline of posts sorted by creation date. Instead of saying "skip the first 100 posts," you say "give me posts created after this timestamp." The database can use an index to jump directly to that position without scanning earlier documents.

How Cursor Pagination Works

The flow typically follows this pattern:

- First request: fetch the first N records and note the cursor value of the last record

- Subsequent requests: fetch N records where the sort field is greater than the cursor value

- Repeat: each response includes a new cursor for the next page

The cursor is typically the _id field, a timestamp like created_at, or a compound value when sorting by non-unique fields.

// First page - no cursor needed$products = Product::orderBy('_id', 'asc') ->limit(20) ->get(); $lastId = $products->last()->_id; // Second page - use the cursor$products = Product::where('_id', '>', $lastId) ->orderBy('_id', 'asc') ->limit(20) ->get();Because _id is always indexed in MongoDB, this query executes with consistent performance regardless of how deep into the dataset you've navigated.

Why Cursors are Efficient

The key difference is how the database executes the query. With a cursor condition like where('_id', '>', $lastId), MongoDB uses the index to jump directly to the starting point. There's no scanning or discarding of documents. The query examines only the documents it returns.

This gives cursor pagination O(1) time complexity for any "page." Whether you're fetching the equivalent of page 1 or page 10,000, the query takes the same amount of time. The only factor affecting performance is the number of documents returned, not the position in the dataset.

The Trade-offs

Cursor pagination has limitations that affect user interface design:

- No arbitrary page jumping: users can only move forward or backward sequentially, not jump to page 50 directly

- No "Page X of Y" display: without a skip-based system, there's no concept of numbered pages

- Cursor management: clients must track the cursor between requests

These constraints make cursor pagination unsuitable for interfaces that require traditional page numbers. However, many modern applications don't need numbered pages at all. Infinite scroll interfaces, "Load more" buttons, and API pagination for mobile apps all work naturally with cursor-based approaches.

Ideal Use Cases

Cursor pagination excels in these scenarios:

- Infinite scroll: social media feeds, news sites, and content discovery interfaces where users scroll continuously

- Mobile and single-page applications: APIs that serve data to frontends with "Load more" functionality

- Real-time feeds: activity logs, notifications, and event streams where new content arrives frequently

- Large-scale APIs: public APIs where preventing deep pagination attacks is important

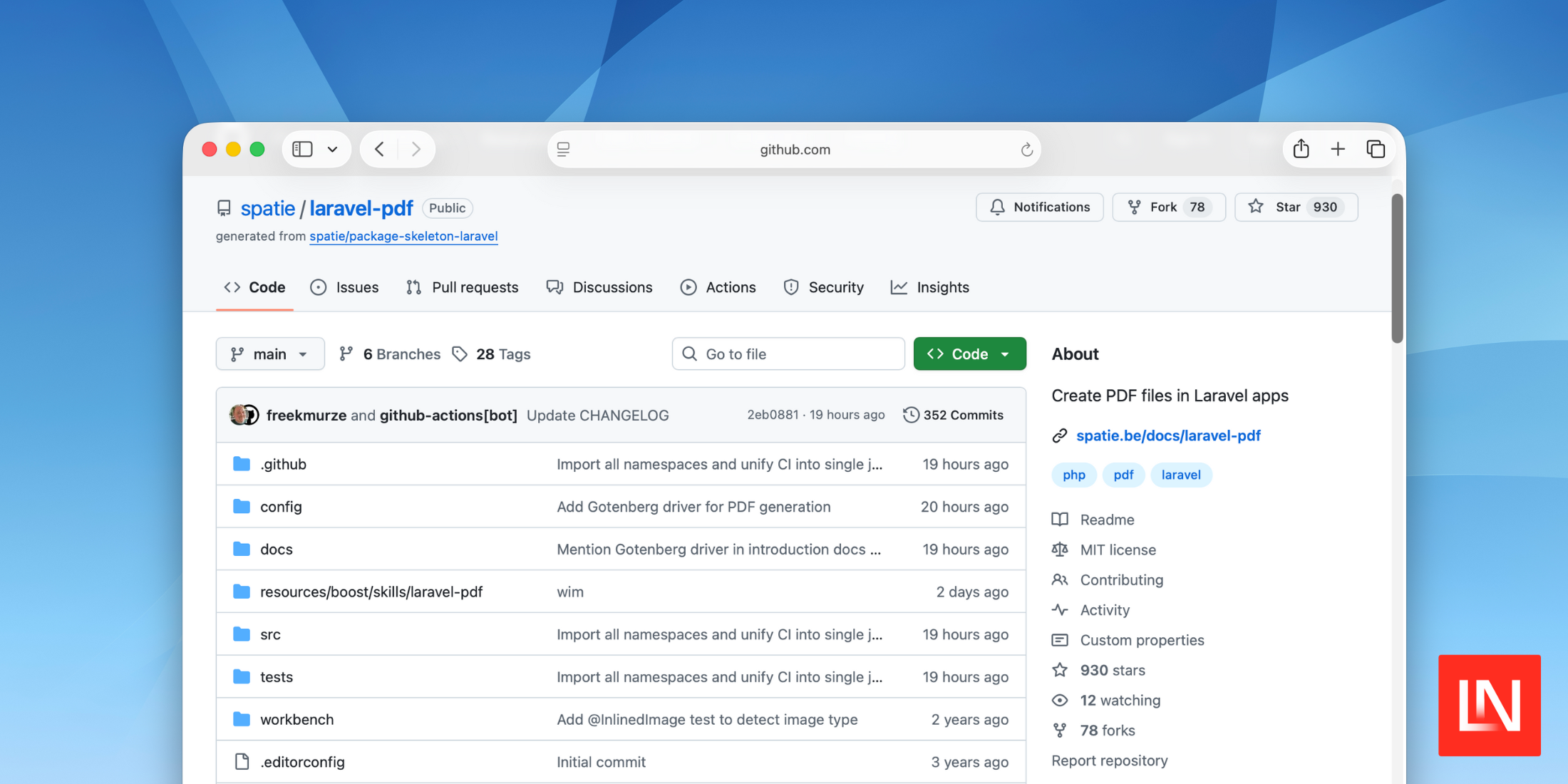

Implementation with Laravel MongoDB

Laravel provides built-in support for cursor pagination through the cursorPaginate() method, which works with the Laravel MongoDB package. This section covers both the built-in method and custom implementations for more complex requirements.

Laravel's cursorPaginate Method

Available since Laravel 8, cursorPaginate() handles cursor encoding, decoding, and navigation link generation automatically:

use App\Models\Product; class ProductController extends Controller{ public function index() { $products = Product::orderBy('_id')->cursorPaginate(15); return response()->json($products); }}The response includes cursor metadata that clients can use for navigation:

{ "data": [...], "path": "http://example.com/products", "per_page": 15, "next_cursor": "eyJfaWQiOiI2NTBhYjEyMzQ1NiIsIl9wb2ludHNUb05leHRJdGVtcyI6dHJ1ZX0", "next_page_url": "http://example.com/products?cursor=eyJfaWQiOiI2NTBhYjEyMzQ1NiIsIl9wb2ludHNUb05leHRJdGVtcyI6dHJ1ZX0", "prev_cursor": null, "prev_page_url": null}The cursor is a base64-encoded JSON object containing the last document's sort field values. Laravel automatically decodes this on subsequent requests and builds the appropriate query.

For API responses, you might format the output differently:

public function index(Request $request){ $products = Product::orderBy('created_at', 'desc') ->orderBy('_id', 'desc') ->cursorPaginate(20); return response()->json([ 'products' => $products->items(), 'meta' => [ 'next_cursor' => $products->nextCursor()?->encode(), 'prev_cursor' => $products->previousCursor()?->encode(), 'has_more' => $products->hasMorePages(), ], ]);}Custom Cursor Pagination

In some cases you need more control than cursorPaginate() provides. Perhaps you're working with complex sorting, need a specific cursor format for your frontend, or want to integrate with existing API conventions.

Here's a basic custom implementation:

use App\Models\Order;use Illuminate\Http\Request; class OrderController extends Controller{ public function index(Request $request) { $perPage = 20; $cursor = $request->input('cursor'); $query = Order::orderBy('_id', 'asc'); if ($cursor) { $decodedCursor = base64_decode($cursor); $query->where('_id', '>', $decodedCursor); } // Fetch one extra to check if more pages exist // then remove it before returning $orders = $query->limit($perPage + 1)->get(); $hasMore = $orders->count() > $perPage; if ($hasMore) { $orders->pop(); } $nextCursor = $hasMore ? base64_encode($orders->last()->_id) : null; return response()->json([ 'orders' => $orders, 'next_cursor' => $nextCursor, 'has_more' => $hasMore, ]); }}The trick of fetching one extra document ($perPage + 1) lets you determine whether more pages exist without running a separate count query.

Handling Compound Sort Orders

When sorting by non-unique fields like created_at, multiple documents might share the same value. This creates ambiguity about which document should come next. The solution is to add a unique field as a tie-breaker, typically _id.

public function index(Request $request){ $perPage = 20; $cursor = $request->input('cursor'); $query = Order::orderBy('created_at', 'desc') ->orderBy('_id', 'desc'); if ($cursor) { $decoded = json_decode(base64_decode($cursor), true); $query->where(function ($q) use ($decoded) { $q->where('created_at', '<', $decoded['created_at']) ->orWhere(function ($q2) use ($decoded) { $q2->where('created_at', '=', $decoded['created_at']) ->where('_id', '<', $decoded['_id']); }); }); } $orders = $query->limit($perPage + 1)->get(); $hasMore = $orders->count() > $perPage; if ($hasMore) { $orders->pop(); } $nextCursor = null; if ($hasMore && $orders->isNotEmpty()) { $lastOrder = $orders->last(); $nextCursor = base64_encode(json_encode([ 'created_at' => $lastOrder->created_at->toISOString(), '_id' => (string) $lastOrder->_id, ])); } return response()->json([ 'orders' => $orders, 'next_cursor' => $nextCursor, 'has_more' => $hasMore, ]);}The compound cursor condition says: "Give me documents where created_at is less than the cursor's timestamp, OR where created_at equals the cursor's timestamp AND _id is less than the cursor's ID." This ensures consistent ordering even when timestamps collide.

Choosing the Right Approach

The decision between offset and cursor pagination depends on your dataset size, user interface requirements, and performance constraints.

Comparison Overview

| Factor | Offset pagination | Cursor pagination |

|---|---|---|

| Performance at scale | Degrades with offset size | Consistent regardless of position |

| Implementation complexity | Simple | Moderate |

| Page jumping | Supported | Not supported |

| "Page X of Y" display | Supported | Not supported |

| Suitable dataset size | Under 100K records | Any size |

Choose Offset Pagination when

- Your dataset is small to medium (under 100,000 records)

- Users need to jump to specific pages directly

- You need to display "Page 3 of 47" in the interface

- You're building admin panels where convenience matters more than performance

- The filtered result set is always manageable, even if the total collection is large

Choose Cursor Pagination when

- Your dataset is large or growing without bounds

- You're building infinite scroll or "Load more" interfaces

- You're creating APIs for mobile applications or single-page apps

- Performance consistency matters more than random page access

- You want to prevent deep pagination attacks on public APIs

Hybrid Approaches

Some applications benefit from combining both methods:

- Offset for early pages, cursor for deep navigation: allow numbered pages for the first 10-20 pages, then switch to cursor-based "Load more" for users who want to go deeper

- Cached counts with cursor pagination: use cursor pagination for data fetching but maintain a cached total count that refreshes periodically, giving users some sense of the dataset size without the performance hit

Performance Tips

Beyond choosing the right pagination strategy, several optimizations can improve performance for both approaches.

Index Your Cursor Fields

Cursor pagination only works efficiently if the fields you're using for cursors are indexed. For queries that sort by created_at and _id, create a compound index:

use Illuminate\Support\Facades\Schema;use MongoDB\Laravel\Schema\Blueprint; Schema::create('orders', function (Blueprint $collection) { $collection->index(['created_at' => -1, '_id' => -1]);});MongoDB automatically creates an ascending index on _id for every collection. If you're sorting only by _id in ascending order, the built-in index handles it. But for compound sorts like created_at descending then _id descending, you need the explicit compound index shown above. MongoDB can't combine separate single-field indexes efficiently for this query pattern.

Without this index, MongoDB falls back to scanning documents even with cursor pagination. The index order matters: it should match your sort order exactly. If you sort by created_at descending and then _id descending, your index should use the same directions.

For filtered queries that also paginate, consider compound indexes that include filter fields first. If you frequently query orders by status and paginate by date, an index on ['status' => 1, 'created_at' => -1, '_id' => -1] lets MongoDB satisfy both the filter and the cursor condition efficiently.

Use Projections

Fetch only the fields your application needs. Large documents with nested arrays or embedded objects take longer to transfer and consume more memory:

$products = Product::select(['name', 'price', 'category', 'created_at']) ->orderBy('_id') ->cursorPaginate(20);This reduces network overhead and speeds up serialization on both the database and application sides. The difference becomes significant when documents contain large text fields, arrays with many elements, or deeply nested structures. A document that's 50KB when fully loaded might only be 2KB when you select the fields you actually display.

Cache Strategically

For frequently accessed first pages, consider caching:

$firstPage = Cache::remember('products:first_page', now()->addMinutes(5), function () { return Product::orderBy('created_at', 'desc') ->limit(20) ->get();});First pages often receive the most traffic, so caching them reduces database load significantly. Deeper pages are accessed less frequently and can be fetched fresh.

If you display total counts, cache them separately with an appropriate TTL rather than counting on every request:

$totalProducts = Cache::remember('products:count', now()->addHours(1), function () { return Product::count();});Consider invalidating cached first pages when new documents are inserted, since stale first pages are more noticeable to users than stale deep pages. You can use cache tags or event listeners to handle this:

// In your model or observerprotected static function booted(){ static::created(function () { Cache::forget('products:first_page'); });}Conclusion

Pagination is a fundamental pattern for handling large datasets, but the implementation you choose has real consequences for performance and user experience. Offset pagination with skip() and limit() is simple to implement and works well for smaller datasets or admin interfaces where arbitrary page access matters. However, its performance degrades linearly as users navigate deeper into large collections.

Cursor pagination maintains consistent performance regardless of position by using indexed lookups instead of document scanning. Laravel MongoDB supports both approaches out of the box, with paginate() for offset-based pagination and cursorPaginate() for cursor-based pagination.

When deciding between them, consider your dataset size and growth trajectory, whether users need to jump to arbitrary pages, and how critical performance consistency is for your use case. For large-scale applications, cursor pagination is usually the better choice. For smaller datasets with traditional pagination UI requirements, offset pagination remains practical.

Whatever approach you choose, remember to index your sort fields, use projections to minimize data transfer, and benchmark with production-scale data before committing to a strategy. Performance characteristics that seem acceptable with 10,000 records often look different with 10 million. If you're building a new feature that handles large or growing datasets, start with cursorPaginate() and save yourself the migration headache later.

Backend engineer and technical author teaching millions through code and content.