Laravel's streamed responses handle large datasets efficiently by sending output to the client incrementally as it is generated. This keeps memory usage low and lets the client start receiving data before the full response has been built.

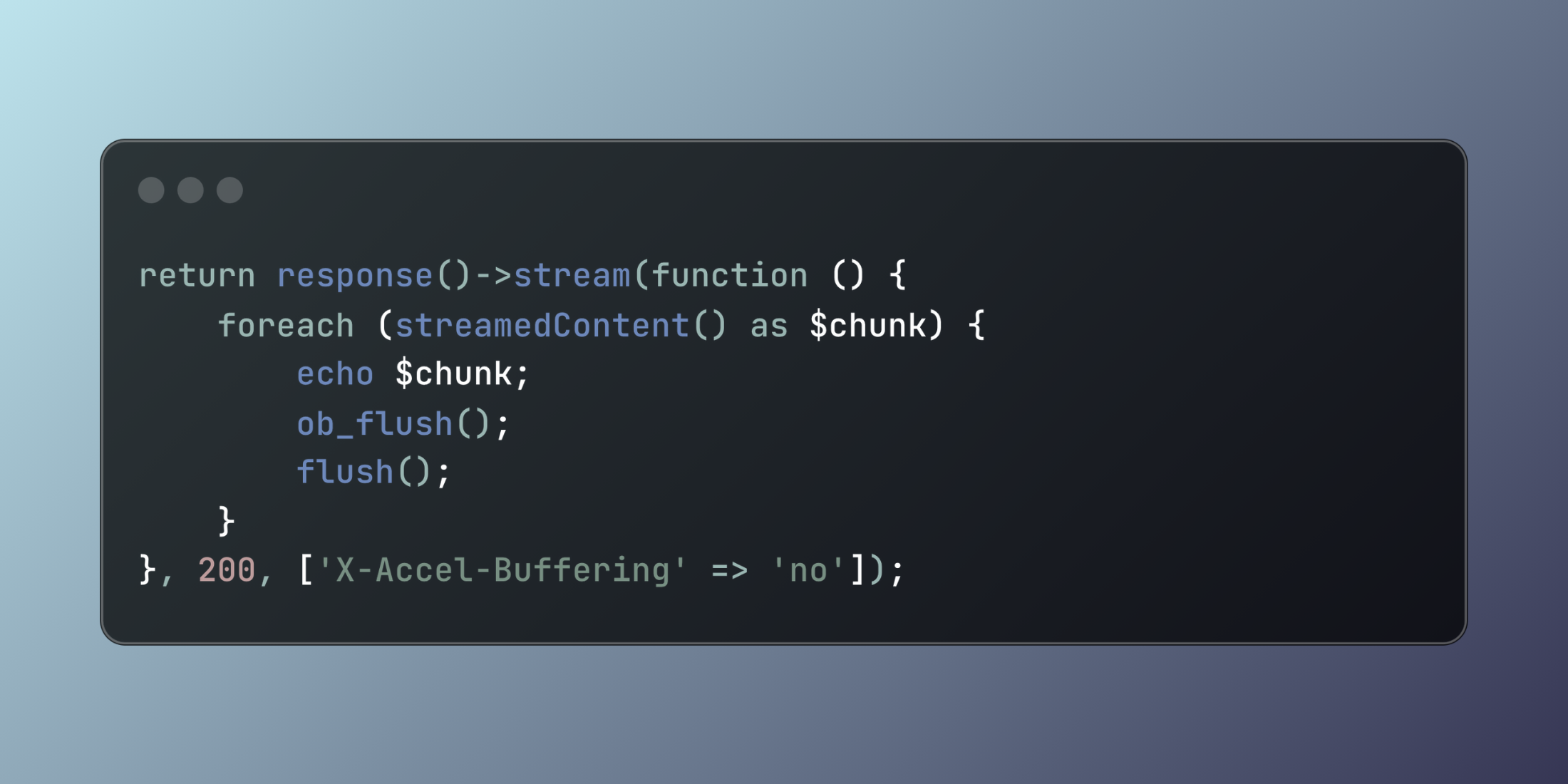

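```php
Route::get('/stream', function () {
    return response()->stream(function () {
        foreach (range(1, 100) as $number) {
            echo "Line {$number}\n";

            // Flush PHP's output buffer only if one is active (ob_flush()
            // raises a notice otherwise), then flush the system buffer.
            if (ob_get_level() > 0) {
                ob_flush();
            }
            flush();
        }
    }, 200, ['Content-Type' => 'text/plain']);
});
```

Let's explore a practical example of streaming a large data export: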

```php
<?php

namespace App\Http\Controllers;

use App\Models\Order;

class ExportController extends Controller
{
    public function exportOrders()
    {
        return response()->stream(function () {
            // Output CSV headers
            echo "Order ID,Customer,Total,Status,Date\n";
            if (ob_get_level() > 0) {
                ob_flush();
            }
            flush();

            // Process orders in chunks to maintain memory efficiency
            Order::query()
                ->with('customer')
                ->orderBy('created_at', 'desc')
                ->chunk(500, function ($orders) {
                    foreach ($orders as $order) {
                        echo sprintf(
                            "%s,%s,%.2f,%s,%s\n",
                            $order->id,
                            // Replace commas so the name stays in one CSV column
                            str_replace(',', ' ', $order->customer->name),
                            $order->total,
                            $order->status,
                            $order->created_at->format('Y-m-d H:i:s')
                        );

                        // Push each row to the client as soon as it is written
                        if (ob_get_level() > 0) {
                            ob_flush();
                        }
                        flush();
                    }
                });
        }, 200, [
            'Content-Type' => 'text/csv',
            'Content-Disposition' => 'attachment; filename="orders.csv"',
            // Ask nginx not to buffer the response
            'X-Accel-Buffering' => 'no',
        ]);
    }
}
```

Streaming responses enable efficient handling of large datasets while maintaining low memory usage and providing immediate feedback to users.
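The export above replaces commas in customer names with `str_replace`, which does not cover quotes or line breaks inside a field. One possible variation, sketched here under assumptions, is to let PHP's built-in `fputcsv` handle the quoting. `writeCsvRows` is a hypothetical helper name, not part of Laravel, and passing an empty escape argument assumes PHP 7.4 or later.

```php
<?php

/**
 * Hypothetical helper (not a Laravel API): write each row to an open
 * stream as one CSV line and return the number of rows written.
 *
 * @param iterable $rows   Each item is an array of scalar field values.
 * @param resource $handle A writable stream, e.g. php://output.
 */
function writeCsvRows(iterable $rows, $handle): int
{
    $written = 0;

    foreach ($rows as $row) {
        // fputcsv encloses fields that contain the delimiter, quotes,
        // or line breaks, and doubles any embedded quote characters.
        // The empty escape argument disables PHP's non-standard
        // backslash escaping (assumes PHP 7.4+).
        fputcsv($handle, $row, ',', '"', '');
        $written++;
    }

    return $written;
}
```

Inside the `stream()` callback, the handle could be opened with `fopen('php://output', 'w')` and each order mapped to an array of fields before being passed to the helper, one chunk at a time. Flushing would still be needed after each chunk, as in the controller above.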