We have just launched Custom Domains V2, and I’m going to share all the technical details with you. The highs and the lows, what I’ve learned, and how to do it yourself. The end result is a highly available and globally fast infrastructure. Our customers love it, and so do we. But let’s go back to the start of this story.

I run a simple, ethical alternative to Google Analytics. One of the most common things people hate about analytics, outside of them being too complex to understand, is that their scripts get blocked by ad-blockers. Understandably, Google Analytics gets blocked by every single ad-blocker on the planet. Google has so many privacy scandals, and a lot of people are scared to send them their visitors’ data. But Fathom is privacy-focused, stores zero customer data, and therefore shouldn’t be treated the same way. So we had to introduce a feature called “custom domains” where our users could point their own subdomain (e.g. wealdstoneraider.ronnie-pickering.com) to our Laravel Vapor application. If you’re not familiar with Vapor, it’s a product built by the Laravel team that allows you to deploy your applications to serverless infrastructure on AWS (I actually have a course on it, that’s how much I love Vapor).

AWS’ services don’t make this custom domain task very easy. If you’re using the Application Load Balancer (ALB), you’re limited to 25 different domains. And if you’re using AWS API Gateway, you get a few hundred, but AWS won’t let you go much higher with that limit. So we were in a position where we really needed to think outside the box.

Attempt 1: Vapor with support from Forge

The first thing I did to try and implement custom domains was to create one environment within Vapor for each custom domain. An environment in Vapor is something like “production” or “staging” typically, but I decided otherwise. Instead of that, we would have “miguel-piedrafita-com” and “pjrvs-com” as our environments. It sounds absolutely stupid in hindsight, but it worked at the time and I thought it was pretty cutting edge.

I built the whole thing out by looking into the Vapor source code on GitHub and playing with the API. I’m not going to share my code because it’s pointless now, but it consisted of the following steps:

- User enters their custom domain in the frontend

- We run a background task to add their domain to Route53 via Vapor

- We initiate an SSL certificate request via Vapor

- We email the CNAME changes required for the SSL certificate

- The user makes the changes

- We check every 30 minutes to see if the SSL certificate has been issued by AWS

- When the certificate is valid, we send an API request to Forge (another Laravel service for deployment) which packages up a separate Laravel application and deploys it to Vapor, as a new environment.

- Forge then pings our Vapor set-up and says “We’re all done here”

- Vapor then checks the subdomain. Woohoo, it works, so we email the user telling them it’s ready to go

This never made it to production, and I shamefully deleted all of the code.

Attempt 2: Forge proxy servers

After I realized that the first attempt just wasn’t going to scale, I went back to the drawing board. I accepted that I would have to have a proxy layer and, to Chris Fidao’s joy, I added EC2 servers to our infrastructure set-up.

I provisioned a load balancer via Forge and put 2 servers inside it. The plan was that I would create an SSL certificate & add a site to each server, via Forge’s API, so if one server went offline, the other would take its place. The whole thing was going to run on NGINX and our only real “limitation” would be Forge’s API rate limits. Easy peasy.

So I had this all built out. It was unbelievably easy. Forge’s API is incredible. So I did what any avid Twitter user would do, I shared where I was with everything.

I was pretty proud of myself. And then Alex Bouma strolled by, threw a grenade in and walked off.

And then Mattias Geniar jumped in (it was his article, after all) and then Owen Conti (who had apparently already told me that this was the way to go back in November 2019) and then Matt Holt (creator of Caddy) jumped in and, boom, that was my finished product gone. Thanks guys.

Now I know what you’re thinking… There’s an awful lot of “not shipping” going on here. I don’t normally get caught up in perfectionism these days but I wanted to include the best possible solution as a video in my Serverless Laravel course. So if there was a better solution available, I had a duty to my course members and Fathom customers to make sure I knew about it.

And this is where Caddy entered my life.

Attempt 3: Highly available Caddy proxy layer

I wish I could travel back in time to 2019 and tell myself about this solution. Maybe it was the timing? After all, Caddy 2 had only just been released when I saw Alex’s tweet.

Caddy 2 is an open-source web server with automatic HTTPS, so you don’t have to worry about managing virtual hosts or SSL certificates yourself; it does that for you automatically. It’s so much simpler than what we’re used to.

So you’ve heard all the failed attempts, let’s get to how we solved things.

Step 1. SSL Certificate Storage

The first thing we needed to do was to create a DynamoDB table. This was going to be our centralized storage. Why did I use DynamoDB? Because I didn’t want certificates stored on a server filesystem. I’m sure we could’ve managed some sort of NAS, but I have no idea how that would’ve worked across regions. Ultimately, I’m familiar with DynamoDB and Matt Holt was kind enough to upgrade the Caddy DynamoDB module to V2 (thanks mate!).

- Open up DynamoDB in AWS

- Create a new table called caddy_ssl_certificates with a primary key named PrimaryKey

- Un-tick the default settings and go with on-demand (no free tier but auto scaling)

- When the table has been created, click the Backups tab and enable Point-in-time Recovery

- And you’re done
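If you’d rather script these console steps, something like the following should produce the same table with the AWS CLI. Treat it as a minimal sketch: it assumes the AWS CLI is installed and configured with credentials that can manage DynamoDB, and it assumes `PrimaryKey` is a string attribute (check the DynamoDB module’s documentation before relying on that).

```shell
# Sketch: create the certificate table from the command line.
# Assumption: PrimaryKey is a string (AttributeType=S).
TABLE_NAME="caddy_ssl_certificates"

create_caddy_table() {
  # PAY_PER_REQUEST is the CLI name for the "on-demand" capacity mode.
  aws dynamodb create-table \
    --table-name "$TABLE_NAME" \
    --attribute-definitions AttributeName=PrimaryKey,AttributeType=S \
    --key-schema AttributeName=PrimaryKey,KeyType=HASH \
    --billing-mode PAY_PER_REQUEST

  # Wait until the table exists before changing its backup settings.
  aws dynamodb wait table-exists --table-name "$TABLE_NAME"

  # Same effect as enabling Point-in-time Recovery on the Backups tab.
  aws dynamodb update-continuous-backups \
    --table-name "$TABLE_NAME" \
    --point-in-time-recovery-specification PointInTimeRecoveryEnabled=true
}
```

Running `create_caddy_table` once is enough; the table name matches the one the Caddy config refers to later.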

Step 2. Create an IAM user

We now needed to create a user with limited access in AWS. We could, in theory, give Caddy an access key with root permissions, but it just doesn’t need them.

- Create a user called caddy_ssl_user

- Tick the box to enable Programmatic Access

- Click Attach existing policies directly

- Click Create policy

- Choose DynamoDB as your service and add the basic permissions, along with limiting access to your table (you can find a video walkthrough in my course if you’re not too sure about this part)

- Boom. Make sure you save the Access key ID and Secret access key

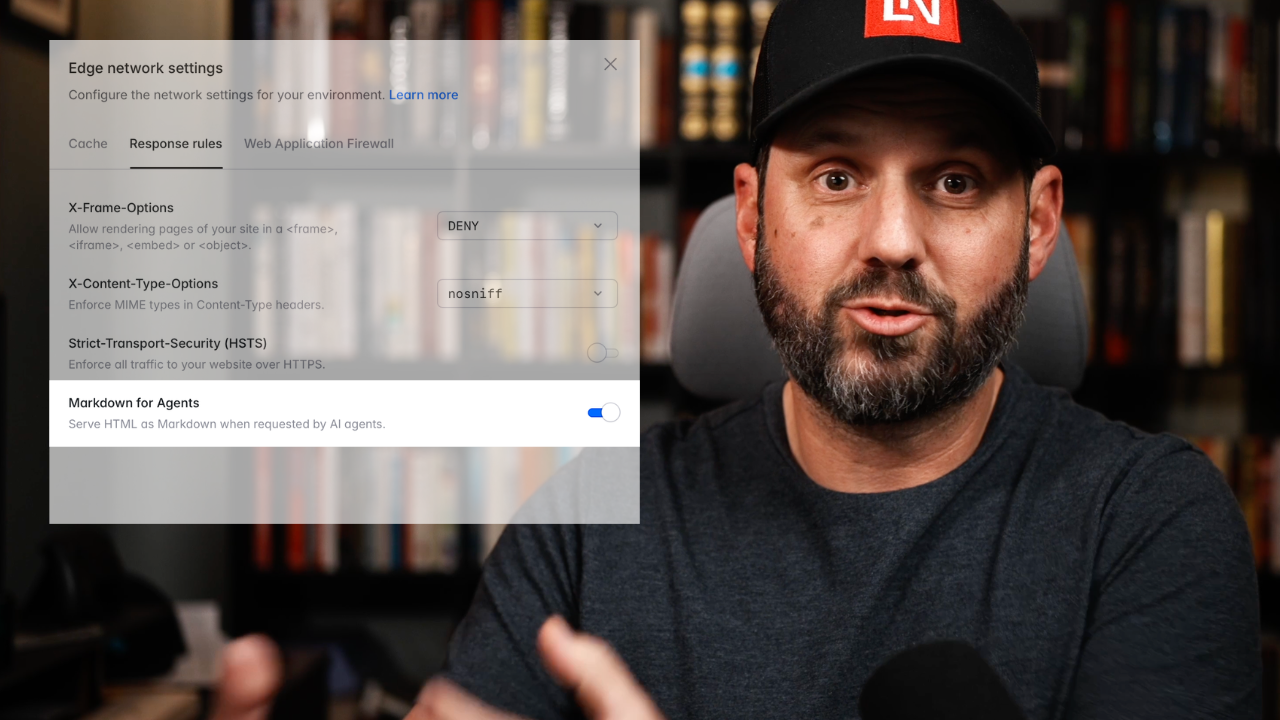
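For anyone who prefers a policy document over the visual editor, here’s a rough sketch of what “basic permissions, limited to your table” could look like. The action list is my assumption about what a certificate store needs (read, write, delete, list), not something taken from the module’s documentation, and the region and account ID are placeholders you’d supply yourself.

```shell
# Sketch: print a policy document scoped to a single DynamoDB table.
# Assumption: the five actions below cover what the storage module does.
caddy_policy_json() {
  local region="$1" account_id="$2" table="$3"
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "dynamodb:GetItem",
        "dynamodb:PutItem",
        "dynamodb:DeleteItem",
        "dynamodb:Scan",
        "dynamodb:Query"
      ],
      "Resource": "arn:aws:dynamodb:${region}:${account_id}:table/${table}"
    }
  ]
}
EOF
}
```

You could write the output to a file with `caddy_policy_json us-east-1 123456789012 caddy_ssl_certificates > caddy-policy.json` and paste it into the JSON tab of the policy editor. If Caddy later logs access-denied errors, that’s the sign the action list needs adjusting.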

Step 3. Create a route in your application to validate domains

We don’t want any Tom, Dick or Harry to point their domains at our infrastructure and have SSL certificates generated for them. No, we only want to generate SSL certificates for our customers.

For this write-up, I’m going to keep things super simple and hard code it all. If you were going to put this into production, you’d have database existence checks, obviously ;)

routes/web.php

    Route::get('caddy-check-8q5efb6e59', 'CaddyController@check');

app/Http/Controllers/CaddyController.php

    <?php

    namespace App\Http\Controllers;

    use Illuminate\Http\Request;
    use Illuminate\Support\Facades\App;

    class CaddyController extends Controller
    {
        protected static $authorizedDomains = [
            'portal.my-customers-website.com' => true
        ];

        public function check(Request $request)
        {
            if (isset(self::$authorizedDomains[$request->query('domain')])) {
                return response('Domain Authorized');
            }

            // Abort if there's no 200 response returned above
            abort(503);
        }
    }

You’ll find out why this endpoint is so important soon.
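Before wiring anything else up, it’s worth poking this route by hand. The sketch below sends the same kind of request the proxy will send (a GET with a `domain` query parameter) and prints the status code. `ASK_URL` is a placeholder built from the example route above; swap in your own application’s URL.

```shell
# Sketch: query the validation route and report whether a domain is allowed.
# ASK_URL is a placeholder; point it at your own application.
ASK_URL="https://your-website.com/caddy-check-8q5efb6e59"

ask_status() {
  local domain="$1"
  # -G sends --data-urlencode values as a query string (?domain=...).
  # -o /dev/null drops the body; -w prints only the HTTP status code.
  curl -sS -G -o /dev/null -w '%{http_code}' \
    --data-urlencode "domain=${domain}" "$ASK_URL"
}

is_authorized() {
  # The controller answers 200 for known domains and aborts with 503 otherwise.
  [ "$(ask_status "$1")" = "200" ]
}
```

With the hard-coded controller above, `ask_status portal.my-customers-website.com` should print 200 and any other domain should print 503.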

Step 4. Preparing our Forge Recipe

Did I say Forge? Laravel Forge? You’re damn right I did. We use Forge to hold our proxy servers (thanks Taylor). I’ve got a recipe template for you here, but you’ll need to go through and fill in the gaps (AWS keys, your website etc.). Again, if you’re not confident on this, my video walkthrough goes into all of this in detail:

    # Stop and disable NGINX
    sudo systemctl stop nginx
    sudo systemctl disable nginx

    # Grab the bin file I've hosted on the Fathom Analytics CDN
    sudo wget "https://cdn.usefathom.com/caddy"

    # Move the binary to $PATH
    sudo mv caddy /usr/bin/

    # Make it executable
    sudo chmod +x /usr/bin/caddy

    # Create a group named caddy
    sudo groupadd --system caddy

    # Create a user named caddy, with a writeable home folder
    sudo useradd --system \
        --gid caddy \
        --create-home \
        --home-dir /var/lib/caddy \
        --shell /usr/sbin/nologin \
        --comment "Caddy web server" \
        caddy

    # Create the environment file
    sudo echo 'AWS_ACCESS_KEY=
    AWS_SECRET_ACCESS_KEY=
    AWS_REGION=' | sudo tee /etc/environment

    # Create the caddy directory & Caddyfile
    sudo mkdir /etc/caddy
    sudo touch /etc/caddy/Caddyfile

    # Write the config file
    sudo echo '{
        on_demand_tls {
            ask https://your-website.com/caddy-endpoint
        }
        storage dynamodb caddy_ssl_certificates
    }

    :80 {
        respond /health "Im healthy!" 200
    }

    :443 {
        tls you@youremail.com {
            on_demand
        }
        reverse_proxy https://your-website.com {
            header_up Host your-website.com
            header_up User-Custom-Domain {host}
            header_up X-Forwarded-Port {server_port}
            health_timeout 5s
        }
    }' | sudo tee /etc/caddy/Caddyfile

    sudo touch /etc/systemd/system/caddy.service

    # Write the caddy service file
    sudo echo '# caddy.service
    #
    # WARNING: This service does not use the --resume flag, so if you
    # use the API to make changes, they will be overwritten by the
    # Caddyfile next time the service is restarted. If you intend to
    # use Caddys API to configure it, add the --resume flag to the
    # `caddy run` command or use the caddy-api.service file instead.

    [Unit]
    Description=Caddy
    Documentation=https://caddyserver.com/docs/
    After=network.target

    [Service]
    User=caddy
    Group=caddy
    ExecStart=/usr/bin/caddy run --environ --config /etc/caddy/Caddyfile
    ExecReload=/usr/bin/caddy reload --config /etc/caddy/Caddyfile
    TimeoutStopSec=5s
    LimitNOFILE=1048576
    LimitNPROC=512
    PrivateTmp=true
    ProtectSystem=full
    AmbientCapabilities=CAP_NET_BIND_SERVICE
    EnvironmentFile=/etc/environment

    [Install]
    WantedBy=multi-user.target' | sudo tee /etc/systemd/system/caddy.service

    # Start the service
    sudo systemctl daemon-reload
    sudo systemctl enable caddy
    sudo systemctl start caddy

    # Remember, when making changes to the config file, you need to run
    # sudo systemctl reload caddy

And then we add this recipe to Forge. Oh, and select “Root” as the user to run it under.

One quick note: you can see that Caddy is downloaded from the Fathom CDN here. This Caddy bin file was compiled by the creator of Caddy, and includes the DynamoDB add-on. You can compile it yourself though, if you’re that way inclined.

Step 5. Creating our proxy servers

It’s server time! So let’s be super clear here: these servers will run zero PHP code. They will be minimal proxy servers. I repeat, we will not be running any PHP code on these servers, they are proxy servers.

- Under Create Server (in Forge) click AWS

- Server configuration

  - Any name

  - t3.small or higher

  - VPC not important

  - Check the “Provision as load balancer” box (keep it minimal)

- Choose a different region for each server you create

- When your servers are finished setting up, copy the IP and then load the following URL: http://[your-server-ip]/health. This will return a message if Caddy is running as expected, and this will be your health check endpoint.

Note: The only path that Caddy serves over port 80 (HTTP) is this health check; everything else goes over 443 (HTTPS).

Step 6. Our “global load balancer”

When I was struggling with load balancers, exploring Network Load Balancer, and everything else, I stumbled upon AWS Global Accelerator by luck. I had never heard of it, and I found it when I needed it. The long story short is that this service offers us a single endpoint (static IP and DNS record), but will route to multiple servers in different regions. The different region piece blew my mind. This means that if a user in the USA hit our endpoint, they’d be routed to us-east-1, whilst someone in the UK (God save the Queen) would be routed to eu-west-1. Incredible.

To do this:

- Open up AWS -> AWS Global Accelerator

- Create an Accelerator

- Choose a name and click next

- For listeners

  - Ports: 443

  - Protocol: TCP

  - Client affinity: None

  - And click next

- Add endpoint groups

  - Choose the region of the 1st server you created in Forge

  - Click Configure health checks

    - Health check port: 80

    - Health check protocol: HTTP

    - Health check path: /health

    - Health check interval: 10

    - Threshold count: 2

  - Repeat for the 2nd server you’re adding

  - Click next

- Add endpoints

  - For each group

    - Endpoint type: EC2 instance

    - Endpoint: Click the dropdown & find your server

- Click Create Accelerator
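The same set-up can be scripted with the AWS CLI if you’d rather not click through the wizard. This is a sketch under a few assumptions: the CLI is configured with permission to manage Global Accelerator, the accelerator name and instance IDs are placeholders, and the API calls are sent to us-west-2, which is where AWS hosts the Global Accelerator API regardless of where your servers live.

```shell
# Sketch: listener and endpoint group settings from the steps above.
GA_REGION="us-west-2"  # region the Global Accelerator API is served from

create_accelerator() {
  # $1 = accelerator name (placeholder, pick your own); prints the new ARN.
  aws globalaccelerator create-accelerator \
    --region "$GA_REGION" --name "$1" --ip-address-type IPV4 --enabled \
    --query 'Accelerator.AcceleratorArn' --output text
}

create_listener() {
  # $1 = accelerator ARN; TCP on 443 with no client affinity.
  aws globalaccelerator create-listener \
    --region "$GA_REGION" --accelerator-arn "$1" \
    --protocol TCP --port-ranges FromPort=443,ToPort=443 \
    --client-affinity NONE \
    --query 'Listener.ListenerArn' --output text
}

add_endpoint_group() {
  # $1 = listener ARN, $2 = region of the proxy server, $3 = its EC2 instance ID.
  aws globalaccelerator create-endpoint-group \
    --region "$GA_REGION" --listener-arn "$1" \
    --endpoint-group-region "$2" \
    --endpoint-configurations "EndpointId=$3" \
    --health-check-protocol HTTP --health-check-port 80 \
    --health-check-path /health \
    --health-check-interval-seconds 10 --threshold-count 2
}

# Example run (hypothetical name and instance IDs):
#   accelerator_arn="$(create_accelerator custom-domains)"
#   listener_arn="$(create_listener "$accelerator_arn")"
#   add_endpoint_group "$listener_arn" us-east-1 i-0123456789abcdef0
#   add_endpoint_group "$listener_arn" eu-west-1 i-0fedcba9876543210
```

One endpoint group per region is what gives you the “nearest server wins” routing, so call `add_endpoint_group` once for each proxy server.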

And now you go and make yourself a cup of tea or coffee. I’d personally go with tea because you’re already going to be filled with adrenaline when you see this globally distributed beast endpoint running, you don’t want to overload with stimulation. Oh, and when you first create your Accelerator, it’ll tell you that your servers are offline. Don’t worry, that’s just a phase it’s going through, and it’ll be normal once it’s fully provisioned (typically less than 5 minutes).

One side note whilst we wait is that you can get more complex with your infrastructure. When I posted my video to everyone in the Serverless Laravel Slack group, one chap said that he would provision auto-scaling EC2s behind load balancers within each region. I have absolutely nothing against this approach, you do you.

Anyway, once the accelerator is deployed, you have both static IPs and a DNS name to use. For root-level entries, you’re going to have to use a static IP, whilst for CNAME entries you can use the DNS name. With Fathom, we actually created “starman.fathomdns.com” that pointed to the DNS name AWS gave us here, so we could give our users a nice-looking CNAME for them to add.

Step 7. Your first automatic SSL certificate

So choose a subdomain (e.g. hey.yourwebsite.com), and add the DNS entry. You should also add hey.yourwebsite.com as an authorized website in CaddyController (see Step 3). Once that’s done, load your website up, and you should see automatic SSL proxying through to Vapor. I do remember that in Chrome, the first run could have issues, since it didn’t seem to like the fact that the SSL was generated on-the-fly, so use multiple browsers if you have to (this is just for the first load).
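If the browser is being awkward on that first load, looking at the certificate from the command line can tell you whether one was actually issued. A small sketch, assuming `openssl` is installed; `hey.yourwebsite.com` is just the placeholder subdomain from this step.

```shell
# Sketch: print who issued the certificate for a domain and when it expires.
show_certificate() {
  local domain="$1"
  # -servername sends the hostname via SNI so the proxy knows which
  # certificate to serve (or to request on demand).
  openssl s_client -connect "${domain}:443" -servername "$domain" \
    </dev/null 2>/dev/null \
    | openssl x509 -noout -subject -issuer -dates
}
```

Run it as `show_certificate hey.yourwebsite.com`. No output usually means the handshake failed, in which case the Caddy logs on the proxy servers are the next place to look.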

Step 8. Using this with your customers

You should always run the checks at your end. When the customer adds the subdomain / domain, you should have 3 statuses on the model:

- STATUS_AWAITING_DNS_CHANGES

- STATUS_AWAITING_STATUS_CHECK

- STATUS_ACTIVE

You then want to have 2 commands that handle all of this:

- CheckDNSChanges – DNS lookup to make sure the customer has made the changes

- CheckIfEnvironmentDeployed – Hit the website over HTTPS to ensure Caddy has been able to generate an SSL certificate for the website. This is where you’d email the user letting them know their domain is ready
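To make the flow between those statuses concrete, here’s a rough sketch of the two checks and the transition between them. It isn’t the code we run (ours lives in Laravel commands); the function names are made up for illustration, `CNAME_TARGET` reuses the example CNAME from Step 6, and it only handles subdomains pointed at us via CNAME, not root domains on a static IP.

```shell
# Sketch: the two checks and the status each one unlocks.
CNAME_TARGET="starman.fathomdns.com"  # the CNAME you hand to customers

# CheckDNSChanges: has the customer pointed their subdomain at us?
dns_points_to_us() {
  local domain="$1" answer
  answer="$(dig +short CNAME "$domain" | head -n 1)"
  # dig prints fully qualified names with a trailing dot; strip it.
  [ "${answer%.}" = "$CNAME_TARGET" ]
}

# CheckIfEnvironmentDeployed: does the domain answer over HTTPS?
https_is_live() {
  # curl verifies the certificate by default, so this only succeeds once a
  # trusted certificate is served. Any HTTP status counts as "live".
  curl -sS -o /dev/null --max-time 15 "https://$1/"
}

# Print the status a domain should move to, given its current status.
next_status() {
  local domain="$1" status="$2"
  case "$status" in
    STATUS_AWAITING_DNS_CHANGES)
      if dns_points_to_us "$domain"; then
        echo STATUS_AWAITING_STATUS_CHECK
      else
        echo "$status"
      fi ;;
    STATUS_AWAITING_STATUS_CHECK)
      if https_is_live "$domain"; then
        echo STATUS_ACTIVE  # this is the point to email the customer
      else
        echo "$status"
      fi ;;
    *)
      echo "$status" ;;
  esac
}
```

Each scheduled run moves a domain forward by at most one status, so a failed check simply leaves it where it is until the next run.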

Remember, I have a big video walkthrough on this whole thing, and 48 other videos in my Serverless Laravel course, where I cover Laravel Vapor from beginner to advanced.

That’s all folks

I hope you love what I’ve put together here. I’d like to say thanks to the following people for their contributions to the process: Matt Holt, Alex Bouma, Mattias Geniar, Owen Conti, Chris Fidao, Francis Lavoie and so many other people.